Corporate artificial intelligence and robotics are no longer just futuristic concepts; they are becoming an integral part of everyday business operations. They enable companies to improve efficiency, productivity, and responsiveness to change, and they support product innovation. One of the key aspects of this integration is sensor AI, which collects and analyses data from a variety of sensors and devices.

Let’s take a look at how sensor AI can transform various industries and help businesses innovate. The following examples illustrate practices already in use today:

• Industrial automation: Sensor AI is used to monitor and control manufacturing processes. Sensors can track essential parameters such as temperature, pressure, or humidity, and can also detect subtle changes in the environment that could signal potential issues. For example, sensors detecting changes in air pressure can warn of impending equipment failure, allowing maintenance to be carried out before the problem becomes serious.

• Medicine: Sensors enable the monitoring of heart rate, blood pressure, or glucose levels. This data can be analysed by artificial intelligence, for example to diagnose and monitor health conditions or to predict the future course of a disease or a patient’s response to treatment. Sensor AI can, for instance, detect patterns of changes in blood pressure that may signal an approaching hypertensive crisis and warn the doctor or patient well in advance.

• Autonomous vehicles: Sensors are crucial for collecting data about the surrounding environment. In addition to traditional sensors such as lidar, radar and cameras, modern vehicles often use other advanced sensors such as ultrasonic sensors to detect obstacles in the vicinity of the vehicle or sensors to measure road quality. This information is essential for the proper functioning of autonomous systems, which must be able to quickly and accurately respond to various situations on the road to ensure safe driving.

• Smart cities: Sensor AI is used to monitor traffic, air quality, noise levels, and other factors affecting the urban environment. Modern sensors measure these essential parameters and can also identify specific sources of pollution or faults in public infrastructure. For example, a sensor network in a city can detect gas leaks in the distribution network and automatically alert the relevant authorities, enabling rapid action.

• Wearables: Sensors in consumer electronics such as smartwatches or fitness bracelets collect data on movement, heart rate, and other physiological parameters. This information is used not only for personal monitoring and improving users’ health; in anonymised form, it can also be shared with research institutions or public health organisations to analyse and predict epidemics or to track population health trends.
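
To make the industrial automation example more concrete, here is a minimal sketch of how a system might flag an unusual air-pressure reading before it turns into a failure. It is an illustration only, not a description of any particular product: the function name `find_anomalies`, the window size, the threshold, and the sample readings are all assumptions, and a simple rolling z-score stands in for whatever model a real deployment would use.

```python
from statistics import mean, stdev

def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the preceding window.

    Hypothetical helper: each reading is compared with the mean and standard
    deviation of the `window` readings immediately before it.
    """
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # A perfectly flat baseline gives sigma == 0; skip it to avoid dividing by zero.
        if sigma == 0:
            continue
        z_score = abs(readings[i] - mu) / sigma
        if z_score > threshold:
            anomalies.append(i)
    return anomalies

# Made-up air-pressure readings in bar; the drop at index 7 simulates a fault.
pressure = [6.01, 6.00, 6.02, 5.99, 6.01, 6.00, 6.02, 5.40, 6.01, 6.00]
print(find_anomalies(pressure))  # prints [7]
```

In practice, the flagged index would trigger a maintenance alert rather than a printout, and the threshold would be tuned to the specific equipment.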

Why don’t Czech companies use AI?

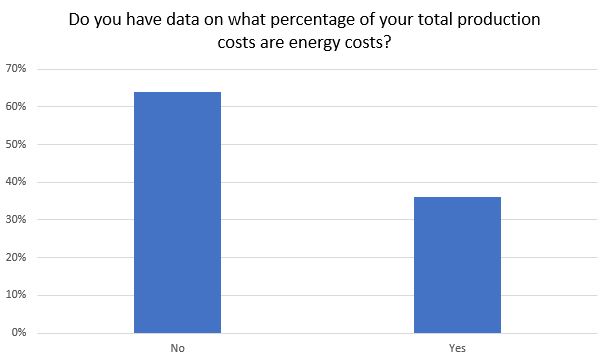

Despite all these potential benefits, many Czech companies are still hesitant to implement AI in their processes. There are several reasons for this.

First, the Czech Republic has a shortage of qualified experts who are able to design AI systems and integrate them into corporate infrastructure.

According to RSM, a local IT consulting firm, 48% of companies have the technical conditions for rapid implementation of AI, but development is hindered by both managers and legislation. The analysis identifies managers’ low willingness to bear the risks associated with the pioneering phases of AI implementation, including legislative and security aspects (e.g., personal data protection), as a specific obstacle. It can also be difficult to agree across the company on how corporate AI should work. Moreover, significant revisions of existing legislation and updates to the national AI strategy are needed, and that process is still in its early stages.

Some companies also lack a clear idea of how to use AI to improve their processes or innovate products and services. This lack of awareness may reduce their motivation to invest in AI technologies.

However, organisations should not resist this trend. In countries such as Japan and the US, AI is already widely used, including in autonomous taxis. Once Czech companies overcome their concerns and embrace AI as an essential part of their operations, they can enjoy higher efficiency, innovation, and a competitive advantage. There is hardly any company that cannot benefit from what AI has to offer, from small things such as data processing and analysis, through process automation and automated vehicle control, to fully autonomous factory or shop-floor operation.